Hey Steemians , This is another video in the MIT Race Car Series project.

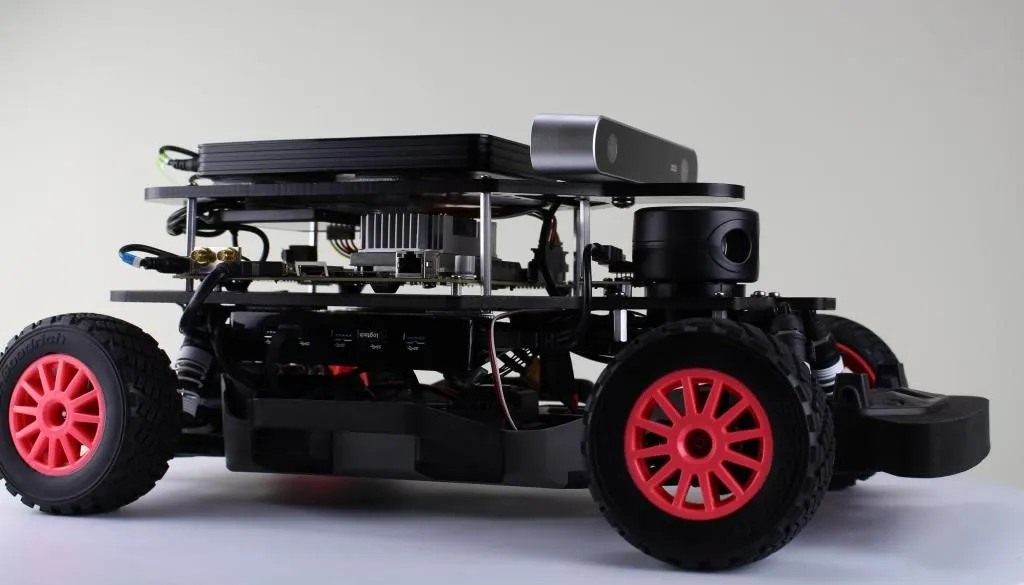

In this Video we carried out a few preliminary tests to test the movements by using depth sensors and Zed Camera.

If you would like to configure your car just like the one you see in the video i would recommend following this Guide .

Hardware Tested In this Video

The reason why we use the ZED camera is because it enables Real-time 3D Mapping which is essential in identifying objects and thereby enabling the robot in making smart decisions.You may find some other Camera's which may work just as well but this was the equipment provided to us for completing this project.

All the Code written for Testing Depth Sensing is included on the Github Repository here .We used Python for implementing these sensors as there are a collection of default Libraries in python which will make our life easier.

For starter's you will require the following imports to use the PyZedCamera

import pyzed.camera as zcam

import pyzed.defines as sl

import pyzed.types as tp

import pyzed.core as core

After importing all the required libraries you can now create an Object of type PyZEDCamera and use all the additional features of PyZedCamera

zed = zcam.PyZEDCamera()

Now You may initialize the depth mode and and specify the depth measurements required.

init_params = zcam.PyInitParameters()

init_params.depth_mode = sl.PyDEPTH_MODE.PyDEPTH_MODE_PERFORMANCE # Use PERFORMANCE depth mode

init_params.coordinate_units = sl.PyUNIT.PyUNIT_MILLIMETER # Use milliliter units (for depth measurements)

Finally here is the code which will allow your robot to assess depth in various images and overwrite the Electronic speed controller thereby enabling your bot to make smart decisions

# Open the camera

err = zed.open(init_params)

if err != tp.PyERROR_CODE.PySUCCESS:

exit(1)

# Create and set PyRuntimeParameters after opening the camera

runtime_parameters = zcam.PyRuntimeParameters()

runtime_parameters.sensing_mode = sl.PySENSING_MODE.PySENSING_MODE_STANDARD # Use STANDARD sensing mode

i = 0 #counter

image = core.PyMat()

depth_for_display = core.PyMat()

print('Current mode: Capture {} images as fast as possible.\nMerge the images.\nSave to pickle files.'.format(num_images))

while i < num_images:

# JOYSTICK

pygame.event.pump() # keep everything current

throttle = (j.get_axis(throttle_axis)+1)/2 # left stick

steering = (j.get_axis(steering_axis)+1)/2 # right stick steering

left_bumper = j.get_button(left_bumper) # left bumper is deadman/ controls whether you are saving

exit_button = j.get_button(exit_axis) # Options button exits

if exit_button:

print('Exit button (options) pressed. Stopping data collection')

break

if left_bumper:

# A new image is available if grab() returns PySUCCESS

if zed.grab(runtime_parameters) == tp.PyERROR_CODE.PySUCCESS:

# Retrieve left image

zed.retrieve_image(image, sl.PyVIEW.PyVIEW_LEFT)

# Retrieve left depth

zed.retrieve_image(depth_for_display,sl.PyVIEW.PyVIEW_DEPTH)

#convert to arrays

data=image.get_data()

depth_data=depth_for_display.get_data()

# Convert images to smaller square images

square_image_size=500

data=cv2.resize(data,(square_image_size,square_image_size))

depth_data=cv2.resize(depth_data,(square_image_size,square_image_size))

merged = merge_images(data,depth_data)

print('writing dataset/image_{0}_{1:.4f}_{2:.4f}.pickle'.format(i,throttle,steering))

if (i%25==0):

print('{} images have been captured'.format(i))

pickle.dump(merged,open( 'dataset/image_{0}_{1:.4f}_{2:.4f}.pickle'.format(i,throttle,steering), 'wb' ))

else:

print('image collection failed')

# Increment the loop

i = i + 1

j.quit()

print('Image capture complete')

# Close the camera

zed.close()

Hope you enjoyed this post, feel free to reach out if you would like to replicate this project or have any questions regarding this project.

▶️ DTube

▶️ IPFS