Summary: I propose a model that distributes GRC in direct proportion to FLOP contributions. This requires an equivalence between CPUs and GPUs, which I establish on the basis of energy consumption; as a side result, the model approximates the energy consumption of the GridCoin network. Pseudocode is included, and it doubles as a summary if you want to skip the justifications. Benefits include a GRC value tied to a fundamental physical quantity (the Joule), accurate predictions of expected GRC based on hardware, and an incentive-compatible distribution of research-minted GRC.

Hi Everyone:

This is a model for comparing CPUs and GPUs in the BOINC and GridCoin networks. The ultimate goal is to achieve a more incentive-compatible GRC distribution in the GridCoin reward mechanism. This model distributes GRC based on floating point operation contributions, and as a side result also closely approximates the energy consumption of the GridCoin network. To understand the motivation for such a model, check out @sc-steemit's post on problems in the GridCoin reward mechanism. For an analysis of this problem from the perspective of incentive structures, I refer you to my previous posts on this topic here and here, where I laid out the motivations for these calculations. If you need a refresher on hardware, I refer you to @dutch's post on the difference between CPUs and GPUs, and @vortac's post on the difference between FP32 and FP64. Here I focus less on incentives and more on the math underlying the CPU/GPU comparison proposal.

Please remember that this is just a proposal. Feel free to poke and prod, that is how it gets better.

Terminology

- CPU = Central Processing Unit

- GPU = Graphics Processing Unit

- FP = Floating Point

- FLOP = Floating Point Operation(s)

- GFLOP = GigaFLOP = 10^9 FLOPs = a billion FLOP

- FLOP/s = FLOP per second

- GFLOP/s = GigaFLOP per second

- FP32 = Floating Point 32-bit = Single-Precision

- FP64 = Floating Point 64-bit = Double-Precision

- J = Joule = Unit of Energy

- W = Watt = Joules/Second = Unit of Power

Comparing CPUs and GPUs

In response to @hotbit's question in the comments of this post, I use as examples the Intel i5-6600K, NVIDIA GTX 1080, and AMD HD 7970, and then generalize to the case where we have varying amounts of different types of hardware (as BOINC and GridCoin have).

Specifications for the three devices:

| Specifications | Intel i5-6600K | AMD HD 7970 | NVIDIA GTX 1080 |
|---|---|---|---|
| CPU FP GFLOP/s | 178 | N/A | N/A |
| GPU FP32 GFLOP/s | N/A | 3788.8 | 8227.8 |
| GPU FP64 GFLOP/s | N/A | 947.2 | 257.1 |
| Peak Power Consumption (Watts) | 91 | 250 | 180 |

Source for Intel i5-6600K; Source for AMD 7970; Source for NVIDIA 1080.

The following table shows FLOP per unit of energy for the three devices, where FLOP/J = (FLOP/s) / W = (FLOP/s) / (J/s).

| Efficiency (GFLOP/J) | Intel i5-6600K | AMD HD 7970 | NVIDIA GTX 1080 |
|---|---|---|---|
| CPU FP GFLOP/J | 1.96 | N/A | N/A |
| GPU FP32 GFLOP/J | N/A | 15.2 | 45.71 |
| GPU FP64 GFLOP/J | N/A | 3.79 | 1.42 |
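The efficiency figures can be reproduced with a few lines of Python. This is only a sketch: the dictionary layout and the function name `gflop_per_joule` are my own choices for illustration, and the inputs are the peak figures from the specifications table. The results agree with the table up to rounding in the last digit.

```python
# Peak throughput (GFLOP/s) and peak power (W) from the specifications table.
# The dictionary layout and key names are illustrative assumptions.
specs = {
    "Intel i5-6600K": {"power_w": 91, "cpu": 178.0},
    "AMD HD 7970": {"power_w": 250, "fp32": 3788.8, "fp64": 947.2},
    "NVIDIA GTX 1080": {"power_w": 180, "fp32": 8227.8, "fp64": 257.1},
}


def gflop_per_joule(gflop_per_second, watts):
    """(GFLOP/s) / W = (GFLOP/s) / (J/s) = GFLOP/J."""
    return gflop_per_second / watts


# Efficiency per device and per calculation type (cpu, fp32, fp64).
efficiency = {
    name: {
        kind: gflop_per_joule(rate, device["power_w"])
        for kind, rate in device.items()
        if kind != "power_w"
    }
    for name, device in specs.items()
}

for name, per_kind in efficiency.items():
    print(name, {kind: round(value, 2) for kind, value in per_kind.items()})
```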

If we want to compare the CPU and the 7970 based on energy consumption, we would say 1.96 GFLOP on the CPU = 15.2 GFLOP in FP32 on the 7970 = 3.79 GFLOP in FP64 on the 7970 (note: if we had an old, inefficient GPU, and a new, efficient CPU, this would not be a proper comparison; more on this later). If these were our only two options, we would be done. But we still have the 1080 here, and in BOINC, we have a total of thousands of different CPUs and GPUs, and varying amounts of each (not just one of each). My proposed solution is to take the weighted average of all of these devices.

First, I will give an example where I include the GTX 1080 into the above calculation, and then generalize to the more realistic case.

The average efficiency of the 7970 and 1080 in FP32 is (15.2 + 45.71)/2 = 30.5 GFLOP/J, and in FP64 it is (3.79 + 1.42)/2 = 2.6 GFLOP/J.

So with these three pieces of hardware, the ratio of CPU : FP32 GPU : FP64 GPU would be 1.96 : 30.5 : 2.6.

Now at this point you may be asking, how is this a fair comparison? I'll give you an example. For simplicity, I am going to use the numbers above, although this argument can be easily generalized. Also for simplicity, I am going to assume that I receive exactly 1GRC for doing the work mentioned above (i.e., doing either 1.96 GFLOP on my CPU, or 30.5 GFLOP FP32 on my GPU, or 2.6 GFLOP FP64 on my GPU, since I am claiming these are equal in value).

I summarize all of these calculations in the next table.

Suppose we have a WU (work unit) from a BOINC project that is CPU only, and it requires exactly 1.96 GFLOP (how convenient!). Furthermore, suppose that we have another WU that requires 30.5 FP32 GFLOP, and a third WU that requires 2.6 FP64 GFLOP.

I can only run my Intel i5-6600K on the first task; that will take 1J of energy and I will get 1GRC for it.

On the second task, I can run either my 7970 or my 1080. If I use my 7970, it will take (30.5 GFLOP) / (15.2 GFLOP/J) ≈ 2.0J of energy and I will get 1GRC for it. If I use my 1080, it will take (30.5 GFLOP) / (45.71 GFLOP/J) = 0.667J and I will get 1GRC for it. Notice how the 1080 used only 1/3 of the energy that the 7970 did to make the same amount of GRC. If you look at the second table, this should make sense, as the 1080 is about 3 times as efficient as the 7970 in FP32.

On the third task, we will see the same effect as in the second, but in reverse, since the 7970 is more efficient than the 1080 in FP64. If I use my 7970, it will take (2.6 GFLOP) / (3.79 GFLOP/J) = 0.686J of energy, and I will get 1GRC for it. If I use my 1080, it will take (2.6 GFLOP) / (1.42 GFLOP/J) = 1.83J, and I will get 1GRC for it. So it takes more energy on my 1080, as expected.

| Energy (in J) Required to Mint 1GRC | Intel i5-6600K | AMD HD 7970 | NVIDIA GTX 1080 |
|---|---|---|---|
| Task 1 (CPU only) | 1 | N/A | N/A |
| Task 2 (FP32) | N/A | 2 | 0.667 |
| Task 3 (FP64) | N/A | 0.686 | 1.83 |
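The same energy figures can be checked with a short script. This is a sketch under the assumptions of the example: each work unit is worth exactly 1GRC, the efficiencies are the rounded GFLOP/J values used in the text, and the names `work_unit_gflop` and `joules_per_grc` are made up for illustration.

```python
# Rounded efficiencies (GFLOP/J) as used in the worked example.
efficiency = {
    "Intel i5-6600K": {"cpu": 1.96},
    "AMD 7970": {"fp32": 15.2, "fp64": 3.79},
    "NVIDIA 1080": {"fp32": 45.71, "fp64": 1.42},
}

# Size of each example work unit in GFLOP; each is assumed to pay 1 GRC.
work_unit_gflop = {"cpu": 1.96, "fp32": 30.5, "fp64": 2.6}


def joules_per_grc(device, kind):
    """Energy the device needs to finish one work unit of the given type."""
    return work_unit_gflop[kind] / efficiency[device][kind]


for device, kinds in efficiency.items():
    for kind in kinds:
        print(f"{device} {kind}: {joules_per_grc(device, kind):.3f} J per GRC")
```

A device that is more efficient for a given calculation type always shows a lower energy cost per GRC, which is the effect the table illustrates.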

As you can see, this preserves the fact that better (more efficient) hardware gets more GRC for the same energy; nothing changes on that front.

For the more general case, we just take a weighted average of all the hardware. For example, suppose we have the following setup:

| Hardware | CPU A | CPU B | CPU C | GPU D | GPU F | GPU G |
|---|---|---|---|---|---|---|
| Number of this type of hardware | 7 | 13 | 2 | 23 | 1 | 19 |
| CPU FP GFLOP/J | 3 | 2 | 4 | N/A | N/A | N/A |
| GPU FP32 GFLOP/J | N/A | N/A | N/A | 32 | 27 | 89 |
| GPU FP64 GFLOP/J | N/A | N/A | N/A | 16 | 5 | 22.5 |

The weighted average for the CPUs would be (7x3 + 13x2 + 2x4) / (7 + 13 + 2) = 2.5.

The weighted average for the GPUs in FP32 would be (23x32 + 1x27 + 19x89) / (23 + 1 + 19) = 57.1.

The weighted average for the GPUs in FP64 would be (23x16 + 1x5 + 19x22.5) / (23 + 1 + 19) = 18.6.

So the equivalence ratio (ER) here would be 2.5 : 57.1 : 18.6.
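The weighted averages above can be computed as follows. This is a minimal sketch: the `(count, GFLOP/J)` pair representation and the function name `weighted_average` are assumptions of mine, and the numbers are the made-up hardware census from the table.

```python
# (number of devices, efficiency in GFLOP/J) for each hardware type in the table.
cpu = [(7, 3), (13, 2), (2, 4)]
gpu_fp32 = [(23, 32), (1, 27), (19, 89)]
gpu_fp64 = [(23, 16), (1, 5), (19, 22.5)]


def weighted_average(pairs):
    """Average efficiency, weighted by how many devices of each type exist."""
    total_devices = sum(count for count, _ in pairs)
    return sum(count * eff for count, eff in pairs) / total_devices


equivalence_ratio = {
    "cpu": weighted_average(cpu),
    "fp32": weighted_average(gpu_fp32),
    "fp64": weighted_average(gpu_fp64),
}

print({kind: round(value, 1) for kind, value in equivalence_ratio.items()})
```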

Please note that this ER, as produced by the method above, should properly look like 2.5 GFLOP/J CPU : 57.1 GFLOP/J FP32 : 18.6 GFLOP/J FP64. However, we can drop the G in GFLOP and the J, so that it looks like 2.5 FLOP CPU : 57.1 FLOP FP32 : 18.6 FLOP FP64. This is useful in understanding the pseudocode.

The Law of Large Numbers and Theoretical Reference Hardware

This equivalence relation could be an unfair comparison if we only take into account a small set of hardware. Since there cannot be a true comparison between CPUs and GPUs, I am relying on an assumption: since hardware progressively becomes faster and more energy efficient, and since there is high demand for both CPUs and GPUs, this progression over time is roughly equivalent between them.

With that assumption in mind, this problem is addressed by the law of large numbers. Since BOINC users number in the thousands, the resulting collections of CPUs and GPUs should be roughly equal in relative sophistication.

The resulting equivalence ratio can be thought of as comprising a theoretical CPU and a theoretical GPU that are equivalent in the sense that running 1J on the CPU is "worth" the same as running 1J on the GPU in both FP32 and FP64.

This is easily the shakiest proposition that I am making, and there certainly could be a better method of calculating the ER. What I am curious about is 1) what do you think of the concept of the ER/theoretical reference hardware, and 2) how do you think it can be made more precise? Is there a more accurate statistical distribution that would yield a more realistic ER?

Pseudocode

As you may have noticed, there is a serious problem with this equivalence. Namely, it assumes that the hardware present in the BOINC network is constant. This is clearly not true, as new users join and existing users leave on a daily basis, not to mention the fact that these devices are not necessarily running 100% of the time with 100% of their cores. As with the old vs. new hardware problem mentioned above, this introduces a level of uncertainty that cannot be perfectly fixed.

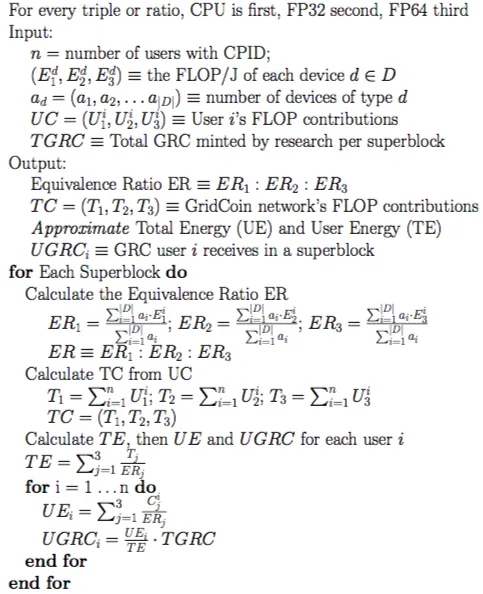

However, I do think that this problem can be sufficiently addressed by taking a survey of existing hardware between each superblock. The equivalence ratio above could be updated once every superblock. Then the user's contribution to each type of calculation within a superblock would be divided by the total GridCoin network's contribution to each type of calculation. The resulting three numbers would be the user's contributions as a fraction of the total contributions for CPU, FP32, and FP64. Then, using the equivalence ratio, we can determine how much GRC a person would receive; this is outlined below.

Summary of proposal in pseudocode. Please note that in the calculations of UGRC_i = UE_i / TE, we cannot cancel the ER_j; you have to take the sum first.

Here is another way of thinking about it: your contribution to CPU will be divided by the total amount of CPU contributions, and then multiplied by the number of GRC minted by CPU-based research per superblock; same for FP32 and FP64. How do we determine how much GRC is minted for CPUs, FP32, and FP64? With the total FLOP for each and equivalence relation.

Thus, you are guaranteed to receive GRC exactly proportional to your contributions to CPU, FP32, and FP64 individually. Uncertainty arises when we try to equate these three using the ER, which effectively converts those FLOP to Joules based on the weighted average of hardware in the network.
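Here is one way the distribution step could look in Python. Treat it as a sketch of my reading of the description, not a definitive implementation: I assume a user's energy equivalent UE_i is the sum over calculation types j of their FLOP divided by ER_j, that TE is the sum of all UE_i, and that `minted_grc` (the research GRC minted per superblock) is a given input. The function name, user names, and numbers are all hypothetical.

```python
def distribute_grc(user_flop, equivalence_ratio, minted_grc):
    """Split minted_grc among users in proportion to their energy equivalents.

    user_flop maps each user to {calculation type: FLOP in this superblock}.
    equivalence_ratio maps each calculation type to its ER_j (FLOP per J).
    """
    # UE_i: convert each type of FLOP to Joules with its own ER_j, then sum.
    # The ER_j differ per type, so they cannot be cancelled before summing.
    user_energy = {
        user: sum(flop / equivalence_ratio[kind] for kind, flop in work.items())
        for user, work in user_flop.items()
    }
    total_energy = sum(user_energy.values())  # TE
    if total_energy == 0:
        raise ValueError("no contributions in this superblock")
    # UGRC_i = UE_i / TE, scaled by the GRC minted in this superblock.
    return {
        user: minted_grc * energy / total_energy
        for user, energy in user_energy.items()
    }


# Hypothetical superblock using the ER from the weighted-average example.
er = {"cpu": 2.5, "fp32": 57.1, "fp64": 18.6}
contributions = {
    "alice": {"cpu": 250.0},
    "bob": {"fp32": 5710.0},
    "carol": {"cpu": 125.0, "fp64": 930.0},
}
rewards = distribute_grc(contributions, er, minted_grc=300.0)
print({user: round(grc, 2) for user, grc in rewards.items()})
```

In this made-up superblock each user contributes the equivalent of 100J, so the minted GRC is split evenly even though the raw FLOP counts differ by more than an order of magnitude.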

Last Issue

How can we calculate the FLOP contributions of each user? Currently there is no way to do this. I think @parejan's series on the expected return of each type of CPU is a good source of data. In their most recent State of the Network, @jringo and @parejan listed the computational power in GFLOPS for most of the projects; this is another good source of data. Finally, there is WUProp@home, which has as its goal to "collect workunits properties of BOINC projects such as computation time, memory requirements, checkpointing interval or report limit."

Many thanks to @barton26 for mentioning this project after this past Thursday's Fireside Chat, and for running it, if I remember correctly. Based on the responses to this post, my next task may be to analyze the data collection necessary to calculate the FLOP contributions of each user.

Benefits

Minted GRC would be tied to a physical quantity, the Joule. This has several benefits, including:

- a close approximation of the amount of energy necessary to mint GRC

- GRC could become associated with another, perhaps green-energy based coin (thanks @jringo for mentioning this possibility after the Fireside Chat)

- it would be very easy to predict how much GRC a person would receive with their hardware

- similarly to Bitcoin, it "costs" something to make a GridCoin; it has always cost something, but now it would be a stable/predictable amount

You can receive the same amount of GRC by running your hardware on any project you like, so you can crunch the project you want the most and not be punished for it, as you currently would be if you crunch a popular project.

Projects will be rewarded only if they have WU.

Conclusion

What are your thoughts?