Introduction

Artificial intelligence is quickly becoming part of our reality. We can ride in self-driving cars, talk to voice assistants, and watch the world's best human players being beaten by a computer. However, alongside incredible possibilities, AI brings serious dangers that cause anxiety among people. In this article we confront common concerns about the future of AI.

Humans will lose their jobs to AI

Do you know any lamplighters? Milkmen? A natural consequence of technological development is that we gain the ability to automate and replace some human occupations. It has always been this way; however, AI arouses particular anxiety, because it is extremely effective at repetitive tasks and can be applied in many different industries.

In 2013, researchers from the University of Oxford estimated the probability that particular occupations would be automated. Occupations were evaluated based on three categories:

- emotional intelligence

- creativity

- manual dexterity

These are the qualities that currently distinguish us from AI and make us more or less irreplaceable. Occupations that demand none of them carry the highest risk. According to the study, professions such as telemarketer, jeweler, cashier, and model have an estimated probability of automation of 95% or more within the next decade or two.

We need to remember that new technology is generally meant to make our lives easier. This inevitably makes some professions unnecessary, but at the same time it opens up many new opportunities. A hundred years ago there was no such job as a computer programmer, while today it is one of the most popular occupations.

Responsibility of AI

How AI should make decisions while driving a car is a serious moral dilemma. Should it swerve into a wall, sacrificing its passengers, in order to avoid a group of pedestrians on the road? Or should the passengers be the priority, as they would be for a human driver? From a purely mathematical point of view the answer may seem obvious, since it is better to save more people. However, knowing that your car may decide to kill you is frightening.

If AI makes a mistake on the road and accidentally kills a human, as happened with a self-driving Uber test vehicle in Arizona in 2018, who should be held responsible? The programmers who developed the AI? The owner of the car? Moreover, if a computer program makes a mistake, every copy of that program may make the same mistake in the future. What would that mean for other self-driving cars?

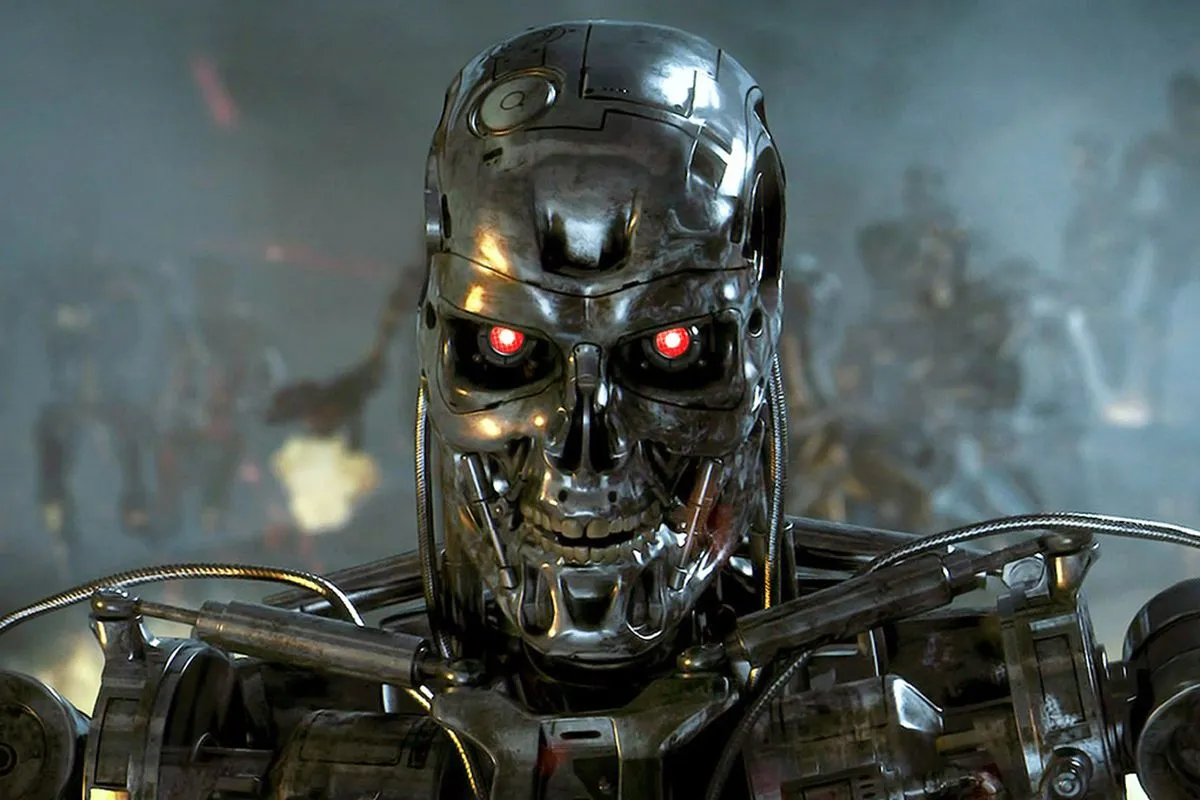

AI can be used to hurt people

Israel and South Korea reportedly already use killer robots which, in automatic mode, can decide on their own whether to kill a human. This sounds frightening, but we need to clarify one thing. Humanity is not yet building machines with strong AI, which could think abstractly or experience consciousness; we are only building machines that are extremely good at learning specific tasks, which is called weak AI.

For that reason, weak AI cannot act like a human being, basing its decisions on emotions, ideology, or faith. It is just a computer program that recognizes patterns effectively and makes decisions accordingly. Its choice is therefore based solely on complex mathematical calculations that take into account the circumstances of a particular situation. It is not the personal decision of a superintelligent autonomous robot.

Things become more complicated if strong AI is ever developed. We still know relatively little about how the human brain works, so fully simulating it on a computer could have unpredictable effects. For example, military robots might unexpectedly switch sides. Stephen Hawking once said:

"Unless we learn how to prepare for, and avoid, the potential risks, AI could be the worst event in the history of our civilization. It brings dangers, like powerful autonomous weapons, or new ways for the few to oppress the many."

In 2017, 116 founders of robotics and AI companies, including Elon Musk, signed an open letter calling on the United Nations to ban lethal autonomous weapons. Clearly, the problem is being taken seriously.

AI may be able to control humans

However, physical force is not the main danger posed by AI. Many species on Earth are physically stronger than humans, yet thanks to our brains we have learned to control them and gain the upper hand. If strong AI is developed, it could become more intelligent than any human, which in turn might make us the controlled ones. The obvious questions are: when could this happen, and how can we avoid this scenario?

Elon Musk wrote on Twitter:

"Probably closer to 2030 to 2040 imo. 2060 would be a linear extrapolation, but progress is exponential."

In fact, it may never happen, or it may happen much earlier than we expect. In 2015, many experts underestimated the ability of AI to play Go, predicting that it would not overtake the best human players until 2027. It ended up taking two years instead of twelve.

We know very little about how strong AI would operate, so we are not even sure whether it can be developed at all, let alone when. Nevertheless, we need to make sure that humanity is prepared for such a breakthrough.

To coexist peacefully with human-level AI, we need it to share human values. It has to evaluate the surrounding world beyond "cold calculations" and treat us the way we treat other humans. It needs to understand morality, loyalty, and social responsibility.