I've been looking to replace my NAS for a while. I considered the commercial options, primarily Synology and QNAP, and decided to build something myself instead.

The primary reasons to build it myself are faster CPUs, more drive bays, the ability to repair it with off-the-shelf components if something breaks, and the ability to use ZFS.

ZFS

If you are not familiar with ZFS, it is a file system that originated on Solaris and has since been ported to Linux and FreeBSD. The most popular way to use it is FreeNAS, an enterprise-class product with commercial-level support. Some of its key features:

- Copy-on-write support

- Snapshots

- Replication

- Efficient Compression

- Caching

- Bit Rot Protection

While ZFS is an amazing file system, it does have one large flaw that can be problematic for most users. If you want to increase your storage, you cannot add disks to an existing array and must create a new one. If you have a RAID 5 array with 4 drives and you want to add a 5th, you can't; you will need to create a new RAID 5 array with at least 3 drives. You need to plan ahead of time how much storage you will need before you replace the hardware, or leave enough room to grow with additional disks.

Because I am not downloading porn and movies like most large NAS home users, my storage needs are a bit more predictable.

NAS Software

There are two major players in the do-it-yourself NAS space:

- FreeNAS

- Unraid

There are a few other options, but those are the two most popular.

I really didn't like either option. FreeNAS is the one I am most interested in, as it is enterprise-class software and uses ZFS. It has a lot of features, but most of them are poorly implemented. The storage functionality is good, but the virtual machine support and other functionality are very weak.

Unraid is a very interesting option. It has modern virtual machine support and a fantastic UI, but it is slow as shit unless you use SSD caching and schedule writes to the array during downtime. It is extremely flexible when it comes to adding storage down the road one disk at a time thanks to its dedicated parity drives, but it suffers greatly when it comes to write performance.

I decided to take the path less traveled and will be using Ubuntu and ZFS directly. I will configure and set up the VM and sharing software myself. This is a lot more work initially, but it should give me excellent storage performance, high reliability, and great performance running virtual machines.

The Build

I am not 100% decided on all the hardware, but this is what I think I am going to order on Monday.

Case

Rosewill 4U 15 Bay Case

Case Upgrades

The fans in the Rosewill suck. From what I understand they always run at 100%, so I will buy fans to replace them. The case uses two 80mm fans and seven 120mm fans. I will be removing the front two fans and reversing the fan tray with three 120mm replacement fans. This should greatly increase airflow and cooling and allow for variable speed (lower noise).

Motherboard

I am going to go with a refurbished Gigabyte GA-7PESH2. This is an older but very high-end server motherboard with the following key features.

- Dual 10Gbit

- 2 Mini SAS 6Gbps ports (supports 8 drives)

- Dual Xeon

- 16 Memory Slots

- IPMI

This board supports the Xeon E5-2600 series (26xx) processors.

CPU

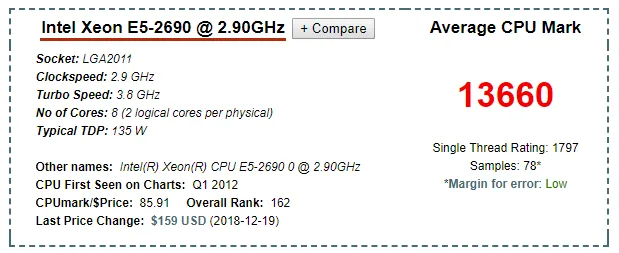

I plan on using dual Xeon E5-2690 v1 processors. These processors have a PassMark score of 13660, with single-threaded performance at almost 1800. They will turbo up to 3.8GHz. Although they are old, the performance is really good for cheap money.

The v2 processor adds two additional cores but is a tiny bit slower and much more expensive.

CPU Cooling

I am going with Arctic Freezer 33 CO coolers. They can handle CPUs up to 150W TDP, which is more than enough for the 135W TDP Xeon 2690s. Of course, I will need two, and I will also need some thermal paste.

I am going to use Gelid Solutions GC-Extreme Thermal Compound as it is the best-rated compound available.

RAM

I am going to use ECC DDR3 RAM and plan on either 64GB or 128GB. I still haven't decided, as it depends on how many virtual machines I plan on running.

One thing about ZFS is that it is very RAM hungry, and I expect about 16GB to go to the operating system and ZFS.
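To get a feel for the 64GB versus 128GB decision, here is a rough sketch of the budget. The 16GB reserve is my estimate from above; the 8GB per virtual machine is purely a hypothetical figure for illustration, and the function names are my own.

```python
def ram_left_for_vms(total_gb: int, reserved_gb: int = 16) -> int:
    """RAM left for virtual machines after the OS and ZFS take their share."""
    if reserved_gb > total_gb:
        raise ValueError("reserved RAM cannot exceed total RAM")
    return total_gb - reserved_gb


def max_vms(total_gb: int, vm_gb: int, reserved_gb: int = 16) -> int:
    """How many VMs of a given size fit in what is left."""
    return ram_left_for_vms(total_gb, reserved_gb) // vm_gb


# 8GB per VM is a hypothetical size, not a measured requirement.
for total in (64, 128):
    print(f"{total}GB total: {ram_left_for_vms(total)}GB free, "
          f"{max_vms(total, vm_gb=8)} VMs at 8GB each")
```

In practice ZFS will happily use more memory for caching if it is available, so treat the reserve as a floor rather than a fixed number.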

Drives

I am not 100% sure what I will use for drives. Right now I am leaning towards Hitachi Ultrastar 4TB 7200 RPM SAS drives or new WD Red 10TB drives.

The Hitachis are dirt cheap as they are pulls from enterprise systems. They are enterprise drives and spin at 7200 RPM but only have a 64MB buffer. I would be able to get 15 of these drives for $750-$900. As enterprise-class drives, they tend to last a lot longer. Although they already have a lot of hours on them, drives fail on a bathtub curve: they typically fail either in the first few days or weeks, or much later, once they are past their rated service life. With many cheap drives, it is easy to replace any failures should they happen, even though this should be rare.

The other option is new shucked Western Digital Red 10TB drives. These are NAS-rated drives but are not enterprise class. They have a much larger buffer (128MB to 256MB) and a much better warranty. The cost is significantly higher, with a single drive running $200-$290. A full rack of 15 of them will run $3,000-$4,350 USD.
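The price gap is easier to see per terabyte. A quick sketch using only the prices quoted above (raw capacity, before any parity is taken out); the function names are my own:

```python
def total_cost(price_per_drive: float, drive_count: int) -> float:
    """Cost of buying drive_count drives at the same price."""
    return price_per_drive * drive_count


def cost_per_tb(price_per_drive: float, drive_tb: float) -> float:
    """Raw cost per terabyte for a single drive."""
    return price_per_drive / drive_tb


# HGST 4TB: $750-$900 for 15 drives, so $50-$60 each.
hgst_low, hgst_high = 750 / 15, 900 / 15
print(f"HGST 4TB:    ${cost_per_tb(hgst_low, 4):.2f}-${cost_per_tb(hgst_high, 4):.2f} per TB")

# WD Red 10TB: $200-$290 each.
print(f"WD Red 10TB: ${cost_per_tb(200, 10):.2f}-${cost_per_tb(290, 10):.2f} per TB")
print(f"15 WD Reds:  ${total_cost(200, 15):,.0f}-${total_cost(290, 15):,.0f}")
```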

I'm still deciding what I want to do, but if I went with the Reds I wouldn't fill the enclosure and would likely only get 8 drives. I don't need a massive amount of storage, so a higher spindle count is more useful to me, which is why I am leaning towards the 4TB HGST drives.

I also have to decide if I want to go RAID 6 or RAID 7. In ZFS land this is raidz2 or raidz3, meaning two or three drives' worth of parity. I am leaning towards raidz2 with two drives for parity. If I have alerts set up to notify me when a drive is going to fail or has failed, I can swap in a replacement right away and avoid a second drive failure. If a second drive does fail, I will still be in business. But 15 drives is a lot of drives for a single array, which increases the chances of multiple drive failures.
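On the command line, the choice between the two comes down to the vdev keyword passed to `zpool create`. A small sketch that only builds the command rather than running it; the pool name `tank` and the device paths are hypothetical placeholders, and `-n` is the dry-run flag so nothing would be created even if the command were executed:

```python
def zpool_create_cmd(pool: str, parity: int, devices: list[str],
                     dry_run: bool = True) -> list[str]:
    """Build the argument list for creating a single raidz2 or raidz3 vdev."""
    if parity not in (2, 3):
        raise ValueError("this sketch only covers raidz2 and raidz3")
    if len(devices) <= parity:
        raise ValueError("need more devices than parity drives")
    cmd = ["zpool", "create"]
    if dry_run:
        cmd.append("-n")  # show what would be done without creating the pool
    cmd += [pool, f"raidz{parity}", *devices]
    return cmd


# Hypothetical device names; real ones would come from /dev/disk/by-id/.
disks = [f"/dev/disk/by-id/EXAMPLE-DISK-{i:02d}" for i in range(1, 16)]
print(" ".join(zpool_create_cmd("tank", parity=2, devices=disks)))
```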

If I go with two parity drives, I will get 52 TB of storage from 15 of the HGST enterprise drives and 60 TB of storage from the WD Reds with only 8 disks.

If I go with three parity drives, I will get 48 TB of storage with the HGST drives. I wouldn't even consider raidz3 with only 8 disks, so I'd still have 60 TB with the WD Reds.
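The capacity numbers above are just (drives minus parity) times drive size. A minimal sketch, with the caveat that it ignores ZFS metadata overhead and the TB versus TiB difference, so real usable space will come in somewhat lower:

```python
def usable_tb(drives: int, drive_tb: int, parity: int) -> int:
    """Approximate usable capacity of one raidz vdev, in TB."""
    if drives <= parity:
        raise ValueError("need more drives than parity drives")
    return (drives - parity) * drive_tb


print("15 x 4TB HGST, raidz2:", usable_tb(15, 4, 2), "TB")
print("15 x 4TB HGST, raidz3:", usable_tb(15, 4, 3), "TB")
print("8 x 10TB WD Red, raidz2:", usable_tb(8, 10, 2), "TB")
```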

Raidz3 would significantly slow down the array and is mostly recommended if you can't respond to disk failures immediately. Responding quickly means having a spare 4TB HGST on hand, which would be smart and relatively cheap ($50-60).
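Responding quickly starts with knowing something is wrong. A minimal sketch of a health check, assuming the `zpool` command is on the PATH and that `zpool status -x` prints `all pools are healthy` when there is nothing to report. The function names are my own, and how the alert actually gets delivered (email, push notification) is left out:

```python
import subprocess

HEALTHY_MESSAGE = "all pools are healthy"


def pools_healthy(status_output: str) -> bool:
    """Interpret the text printed by `zpool status -x`."""
    return status_output.strip() == HEALTHY_MESSAGE


def check_pools() -> bool:
    """Run `zpool status -x` and report whether every pool is healthy."""
    result = subprocess.run(
        ["zpool", "status", "-x"],
        capture_output=True,
        text=True,
        check=False,  # inspect the output ourselves instead of raising
    )
    return pools_healthy(result.stdout)


# Intended use from cron or a systemd timer (not run here):
#     if not check_pools():
#         send_alert()  # hypothetical function, not defined in this sketch
```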

Operating System and Boot Drive

I am going to use a pair of 256GB SATA SSD drives for the operating system in a RAID 1 configuration. It is important to have the operating system on a dedicated drive, although it is possible to have it on a ZFS pool.

I could very easily use a flash drive for the operating system, but I want to have redundancy and a bit more performance. I also may have more than just ZFS running on the core operating system.

A 256GB SATA SSD drive can be bought new for around $30-50, so a pair of them dedicated to booting is not a big expense, but still a lot more than a $5 flash drive.

SAS Expander

The motherboard supports up to eight 6Gbps SATA drives via the two Mini SAS ports. You can feed these into a SAS expander and use up to 24 drives. Although the case only holds 15, an expander is still required to go over 8 drives.

Power Supply

I have a couple of these brand new from when I was building mining rigs. They have two CPU cables, so I won't need to worry about a splitter. Plus they have just been sitting on a shelf in the closet doing nothing.

Cables

I am going to need Mini SAS to SAS cables. Each of the two Mini SAS ports on the motherboard can break out to 4 SAS/SATA drives.

I will also need additional SATA power connectors, as the power supply doesn't have enough to feed 17 drives. I will pick up five of these, but I am not sure how many I will actually need.

Misc

I will need a 10Gbit network card for any machine I want to get full performance from the NAS. I am going to go with the Intel X540 dual 10Gbit card, as it uses the same controller as the motherboard.

I recently ran Cat 6e plus throughout the house, which supports 10Gbit. I haven't tested the runs at 10Gbit as I haven't had a need. I will likely only need a direct line from my NAS to my main workstation, as I don't see a real need to feed all my devices with 10Gbit. This saves me having to buy a 10Gbit switch, which is still a bit out of the mainstream for a few more years. I currently use a 24 port 1Gbit switch with 12 PoE ports.

I will run a patch panel between the NAS and my machine on a dedicated 10Gbit port until I have the need for a 10Gbit switch. The Intel X540 supports automatic MDI/MDI-X configuration, so I won't need a crossover cable.

I am heavily leaning towards 16x4TB HGST drives: 15 running raidz2 for a total of 52 TB of storage, plus one cold spare. I'm guessing I'll end up with around 800MB/s read/write and 16 Xeon cores for around $2,000 USD.
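As a sanity check on why the 10Gbit link matters for that 800MB/s guess, here is a quick sketch comparing it against the raw line rate of a 1Gbit and a 10Gbit link. It ignores protocol overhead, so real-world numbers will be lower, and the function names are my own:

```python
def link_mb_per_s(link_gbit: float) -> float:
    """Raw line rate of a network link in MB/s (1 Gbit = 1000 Mbit, 8 bits per byte)."""
    return link_gbit * 1000 / 8


def link_utilisation(throughput_mb_s: float, link_gbit: float) -> float:
    """Fraction of the link's raw line rate a given throughput would use."""
    return throughput_mb_s / link_mb_per_s(link_gbit)


print(f"1Gbit link:  {link_mb_per_s(1):.0f} MB/s")
print(f"10Gbit link: {link_mb_per_s(10):.0f} MB/s")
print(f"800MB/s on 10Gbit: {link_utilisation(800, 10):.0%} of line rate")
```

On a 1Gbit link the network, not the array, would be the bottleneck by a wide margin.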

I will put together a post when I start building and have some benchmarks, provided everything is successful.

All images not sourced are CC0 or from Amazon