Repository

https://github.com/utopian-io/review.utopian.io

Components

The Review Questionnaire is the place where reviewers submit their answers and get the score for a pending contribution. While most categories may have a very streamlined experience with it, the translations' questionnaire has many issues.

The most common complaint about the questionnaire is the difficulty question. There are 4 difficulty levels (Easy, Average, High and Very High) and there is a lot of unfairness in how each project is scored.

Most projects are rated as "Average Difficulty", even though they aren't, as going for "High" will increase the score dramatically.

A few days ago, the Utopian voting bot was updated with new voting logic to make sure no great contributions go unvoted (while not-that-great ones get their upvotes). There is another step that would make the whole process better: changing the questionnaire.

Instead of presenting each question here and then proposing my changes in the next section, I decided to merge the presentation and the proposal for each question below, just to make it easier to comprehend.

The DaVinci team is already gathering their own proposals for the questionnaire, so we will have the best and fairest questionnaire in no time!

Proposal Description

I'll present each question, together with its issues and proposals in sections to make it more readable.

How would you describe the formatting, language and overall presentation of the post?

This question is too generic and could be broken into 2 parts. The first part should be about the formatting and overall presentation of the post. A reviewer should take into account whether there are images/videos and whether the author has taken steps to make the reading experience better and easier on the eyes.

The second part would be about the language (are there syntax mistakes? did the author forget or misuse punctuation marks, like I'm doing in this post?) and whether the post tries to engage visitors in conversation and get them to learn more about the project and/or the category.

How would you rate the overall value of this contribution on the open source community?

This question should not be in the questionnaire. It might make sense, but all contributions are valuable. And since most contributions get "This contribution adds some value to the open source community or is only valuable to the specific project," the question shouldn't make a difference in the score.

What is the total volume of the translated text in this contribution

With the way the questionnaire is built now, the score does not change whether you translate 1000, 1200, 2000 or 5000 words, so most translators are translating 1150 words on average. But translators who submit fewer words are penalised. The questionnaire's current volume levels are: Less than 400 words, 400 - 700 words, 700 - 1000 words, More than 1000 words.

There should be more volume levels (at least 2) and the current levels should be changed. My proposal is to have the following volume levels:

- More than 3000 words

- 2500 - 3000 words

- 2000 - 2500 words

- 1500 - 2000 words

- 1000 - 1500 words

- 500 - 1000 words

- Less than 500 words
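To show how the proposed levels would sort a contribution, here is a minimal Python sketch. The function name `volume_level` is hypothetical, and the boundary handling is my assumption: the list above does not say which level an exact boundary such as 1000 words belongs to, so the sketch puts each boundary value in the higher range, except 3000, which stays in "2500 - 3000 words" because the top level says "More than 3000".

```python
# Hypothetical helper: maps a translated word count to one of the
# proposed volume levels. Boundary handling is an assumption.
RANGE_LEVELS = [
    (2500, "2500 - 3000 words"),
    (2000, "2000 - 2500 words"),
    (1500, "1500 - 2000 words"),
    (1000, "1000 - 1500 words"),
    (500, "500 - 1000 words"),
]


def volume_level(word_count: int) -> str:
    """Return the proposed volume level for a given word count."""
    if word_count < 0:
        raise ValueError("word_count cannot be negative")
    if word_count > 3000:
        return "More than 3000 words"
    # Walk the ranges from highest to lowest lower bound.
    for lower_bound, label in RANGE_LEVELS:
        if word_count >= lower_bound:
            return label
    return "Less than 500 words"


print(volume_level(1150))  # the average contribution mentioned above
print(volume_level(2600))
```

With these levels, a 1150-word translation and a 2600-word translation no longer land in the same bucket, which is the point of the proposal.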

How would you rate the overall difficulty of the translated text in this contribution?

This question is the one that makes our reviewing lives difficult. There are 4 difficulty levels (Extremely High, High, Average and Low), and each step down removes a big chunk of the score. My proposal is to have 7 (if not more) difficulty levels, and each project should have a predefined difficulty level:

- Very High

- High to Very High (middle ground between 1 and 3)

- High

- Average to High (middle ground between 3 and 5)

- Average

- Low to Average (middle ground between 5 and 7)

- Low

There should also be an extra question that takes into account the actual difficulty of the project in each language and gives a small bump (or penalty) to the score. The reviewer could rate the "language" difficulty of each project as:

- Very Difficult

- Difficult

- Neutral

- Easy

- Very Easy
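A rough Python sketch of how a predefined project difficulty and the extra "language" rating could combine. Everything numeric here is a placeholder of mine, not a proposed score: `STEP_POINTS`, `LANGUAGE_BUMP`, the project name `example-project` and the function `difficulty_points` are all hypothetical, and the real values would be up to the @utopian-io team.

```python
from enum import IntEnum


class Difficulty(IntEnum):
    """The 7 proposed project difficulty levels, lowest to highest."""
    LOW = 1
    LOW_TO_AVERAGE = 2
    AVERAGE = 3
    AVERAGE_TO_HIGH = 4
    HIGH = 5
    HIGH_TO_VERY_HIGH = 6
    VERY_HIGH = 7


class LanguageDifficulty(IntEnum):
    """The proposed per-language rating, as a signed number of steps."""
    VERY_EASY = -2
    EASY = -1
    NEUTRAL = 0
    DIFFICULT = 1
    VERY_DIFFICULT = 2


# Hypothetical lookup: each project gets its difficulty assigned once,
# so reviewers no longer pick it per contribution.
PROJECT_DIFFICULTY = {"example-project": Difficulty.AVERAGE_TO_HIGH}

STEP_POINTS = 2.0    # placeholder: points per difficulty level
LANGUAGE_BUMP = 0.5  # placeholder: small bump or penalty per step


def difficulty_points(project: str, language: LanguageDifficulty) -> float:
    """Combine the predefined difficulty with the language adjustment."""
    base = PROJECT_DIFFICULTY[project]
    return base.value * STEP_POINTS + language.value * LANGUAGE_BUMP


print(difficulty_points("example-project", LanguageDifficulty.DIFFICULT))
```

Because the steps between levels are small and even, moving a project one level up or down changes the result far less than with only 4 levels.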

How would you rate the semantic accuracy of the translated text?

This question is also too punishing for the contributions. We are trying to choose an answer with our own metrics, but that's a problem. One reviewer might choose "Good" instead of "Very Accurate" with 1 mistake, while the other might choose "Very Accurate" with 3 mistakes.

Of course, this one depends on the impact each mistake has on the translation. Forgetting to translate half a string has a bigger impact than using a synonym. The questionnaire should move to a "count of mistakes" type of answer. This one needs a lot of thought (to make clear what counts as a mistake). It could also be broken up into 2 questions:

- How many serious issues did you find in this contribution?

- How many non-altering mistakes did you find in this contribution?
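As a sketch of what a "count of mistakes" answer could look like in practice, the Python below turns the two counts into an accuracy score. The weights and the maximum (`SERIOUS_PENALTY`, `MINOR_PENALTY`, `MAX_ACCURACY_POINTS`) and the function name `accuracy_points` are placeholders I made up for illustration; the actual scores are not part of this proposal.

```python
# Placeholder values, not proposed scores.
MAX_ACCURACY_POINTS = 10.0
SERIOUS_PENALTY = 3.0  # per serious issue (e.g. half a string left untranslated)
MINOR_PENALTY = 0.5    # per non-altering mistake (e.g. a weaker synonym)


def accuracy_points(serious_issues: int, minor_mistakes: int) -> float:
    """Score accuracy from mistake counts instead of a subjective label."""
    if serious_issues < 0 or minor_mistakes < 0:
        raise ValueError("mistake counts cannot be negative")
    penalty = serious_issues * SERIOUS_PENALTY + minor_mistakes * MINOR_PENALTY
    # Never go below zero, however many mistakes were found.
    return max(0.0, MAX_ACCURACY_POINTS - penalty)


print(accuracy_points(0, 3))  # three non-altering mistakes
print(accuracy_points(1, 0))  # one serious issue
```

Two reviewers who find the same mistakes would now always produce the same score, instead of one picking "Good" and the other "Very Accurate".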

New question proposal:

Another question that we could add is one that gives a little incentive for better communication between a translator and a reviewer. An extra point could be given if the translator responds promptly, and a point should be subtracted if the translator was unresponsive to a reviewer's communication.

Mockups / Examples

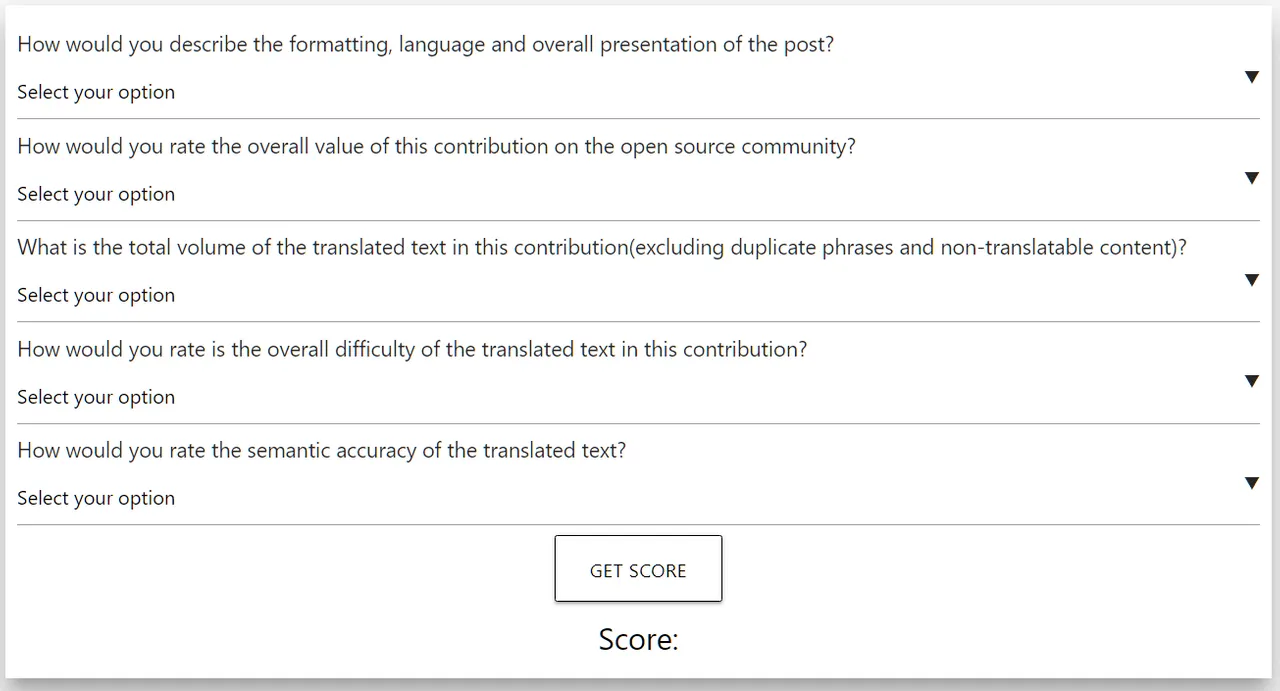

This is how the questionnaire is currently structured:

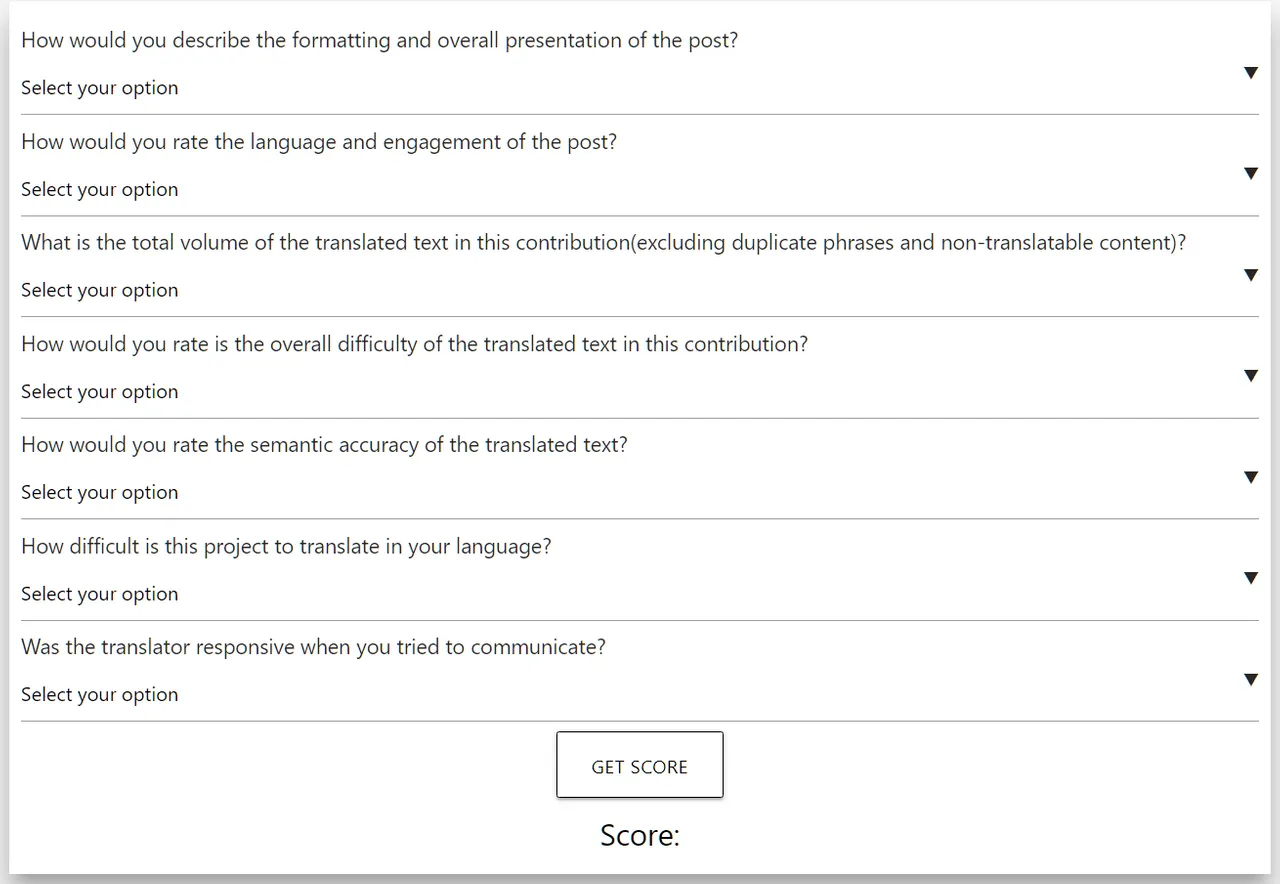

And this is the questionnaire with my proposals:

Instead of having a lot of different images here, I've taken the initiative to implement my proposals into a copy of the actual questionnaire, just to make it a little bit more of an interactive proposal. You can find it here together with the current one.

Please note: while I've modified some of the scores, I'm not an expert on that. My proposal is just for the Questions/Answers. The scores should be decided and changed by the @utopian-io team.

Benefits

The translations category is currently one of the biggest (if not the biggest) in the Utopian ecosystem. By implementing part (or all) of my proposals, the questionnaire will be able to give a more accurate and fair distribution of incentives across a larger number of contributions.