At the end of August, @elear and the Utopian staff gave the Community Managers the awesome task of researching the guidelines and metrics professionals use to measure the quality of a translation. The purpose is to raise the new translation guidelines to a professional quality level. Once the new guidelines are done, we can work on the questionnaire so that scoring is as professional as possible and helps our translators move toward higher standards.

Two months have passed, and this is what we have done for the Translation category.

A. September & October - Two Months of Research & Write-Up

In September, I spent the entire month gathering resources and researching this topic online.

In October, I started writing up my research in documents so that our Language Moderators and Translators, together with the DaVinci Team, could take part in the discussion as we work out the new guidelines for the Translation category.

Part 1 - What Metrics & Standards Professionals Use To Evaluate Translation Quality

Part 2 - Four Standards Professionals Use To Evaluate Translation Quality

Part 3 - What Metrics Do Professionals Use To Evaluate Translation?

B. October - Getting the Language Moderators Involved

We currently have 101 Translators on board in the DaVinci Translation Teams. Among them are 25 Language Moderators who actively score submissions every day as they review the quality of the translation work.

Since we don't work in a vacuum, I saw an urgent need to bring the Language Moderators into the discussion. This seemed almost impossible at first, but as I started talking to our moderators through Discord DMs, I saw that we needed a better communication environment so that our Language Moderators could participate effectively. This is how the interviews with the moderators began in October.

So we have been able to accomplish the following in October:

- Patiently interviewing all 25 Language Moderators so that we could interact in a more personal way and communicate effectively.

- Getting the Moderators' feedback in the #Language Manager Room

- Starting public discussions among some of our moderators

Our discussions moved forward as more moderators started interacting in the channel. From there, we also gained a clearer picture of the problems and struggles we need to address most urgently.

Recently, the DaVinci team also created a dedicated channel, #ideas and suggestions, where our moderators can write out their suggestions. That way, we have all the ideas in one place. We are now moving forward with these internal discussions toward reworking the questionnaire for the Translation category.

C. November - The urgent need to work on the Questionnaire

As we continue to work on the new guidelines, we also aim to change the present questionnaire so that it reflects professional metrics for evaluating translation work.

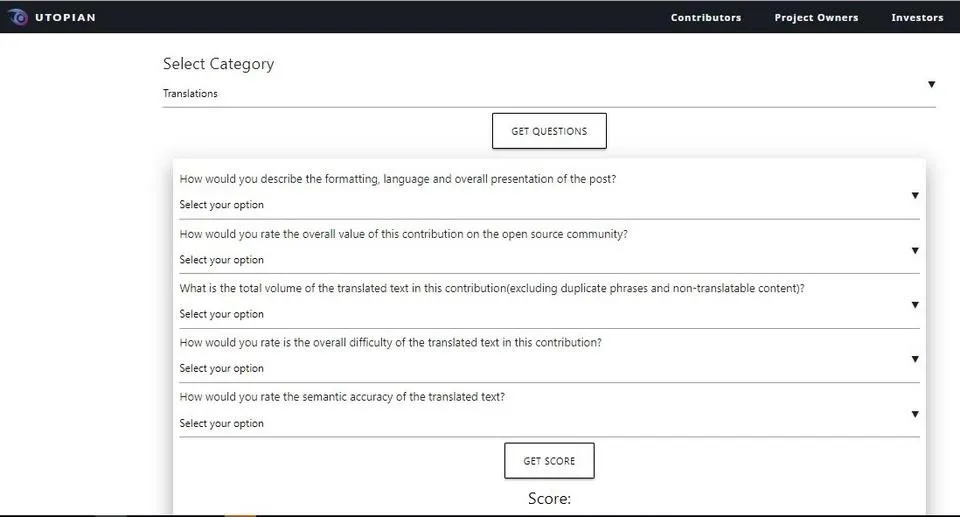

In our current scoring review, moderators answer the 5 questions shown above, and they have been using these questions to score the quality of the translation work.

We realize these questions have weaknesses: the score does not always reflect the quality of the translation, and different moderators interpret the questions differently, which makes the final score subjective.

In the coming days, the DaVinci team is going to work out a solution for this with the help of @Didic and final approval from @elear.

I have spent a lot of time working out a proposal for the questionnaire that is as granular as possible, making each point as specific and objective as possible.

D. Questionnaire Proposal: From Principles to Practice

As a Community Manager, I have researched the principles used to evaluate a translation and the measurable metrics for scoring translation work. These metrics are easy to implement and are an excellent step towards an objective linguistic quality measure in the questionnaire scoring sheet.

When scoring a submission to the Translation category at Utopian, I propose that we consider the following 7 areas:

1. Quality of Translation Text - Accuracy is Paramount (Nine Categories to Measure Accuracy)

2. Quantity of Translation Text - Number of Words

3. Difficulty of Translation Text

4. Translator's Knowledge & Skill

5. Submission Post at Utopian

6. Teamwork with Language Moderator

7. Added Value to the Open Source Project

- Points 1, 2, and 3 relate to scoring the actual translation work.

- Points 4, 5, 6, and 7 relate more specifically to the translation production process.

1. Quality of Translation - Accuracy is Paramount

In our Translation category, we aim for professional quality standards. Quality means providing a fluent translation that conveys the same meaning as the source document. Measured against the criteria below, it should not be a subjective judgment.

I would say that 75% of the total score should be allocated to this first point.

An error-free, quality translation should meet the following criteria:

- Correct information is translated from the source text to the target text.

- Appropriate choice of terminology, vocabulary, idiom, and register in the target language.

- Appropriate use of grammar, spelling, punctuation, and syntax in the target language.

- Accurate transfer of dates, names, and figures in the target language.

- Appropriate style of text in the target language.

Nine Categories to Measure Quality

A high-quality translation should be completely accurate and error-free.

To measure quality, we can use the following measurable metrics for accuracy.

Moderators can note down errors made in the following 9 categories:

- Using a wrong term

- Conveying a wrong meaning in translation

- Omitting important words in the translation

- Structural errors in the sentences

- Syntactic errors - words put in the wrong order

- Grammatical errors - misusing parts of speech

- Misspelling

- Punctuation errors - misplaced punctuation can change the meaning of the text

- Style, spacing and formatting errors (specifically related to Crowdin, such as embedded codes, text formatting, whitespace, etc.)

Points Given to Each Error Made

Moderators can note down the number of errors made in any of the above categories:

- Each error counts as one error point.

- In this way, the quality of the translation will be graded by the number of errors made.

- The higher the number of errors, the lower the quality of the translation.

- The lower the number of errors, the higher the quality of the translation.

- If no errors are made, the translation is perfect and will receive a high score.

- A repeated error counts as only one error point, since the translator is not aware of the mistake.
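The counting rule above could be sketched in Python as follows. This is only an illustration, not an existing Utopian tool: the short category keys and the `count_error_points` function are hypothetical names, and it assumes a "repeated error" means a note with the same category and the same description.

```python
# The nine error categories listed above, as hypothetical short keys.
CATEGORIES = {
    "wrong_term", "wrong_meaning", "omission", "structure", "syntax",
    "grammar", "spelling", "punctuation", "style_formatting",
}


def count_error_points(errors):
    """Count error points from a moderator's notes.

    `errors` is a list of (category, description) pairs. Identical pairs
    are treated as one repeated mistake and counted only once.
    """
    unique = set()
    for category, description in errors:
        if category not in CATEGORIES:
            raise ValueError(f"unknown error category: {category}")
        # Normalize the note so trivial spacing/case differences still match.
        unique.add((category, description.strip().lower()))
    return len(unique)


notes = [
    ("spelling", "recieve -> receive"),
    ("spelling", "recieve -> receive"),  # same mistake repeated
    ("wrong_term", "'branch' translated as 'tree limb'"),
]
print(count_error_points(notes))  # 2
```

Matching repeats by exact description is a simplification; in practice a moderator would decide which notes describe the same mistake.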

Grading of Severity of Errors:

The grading depends on the volume of words translated. Let's use a 1000-word translation as an example:

- Excellent: 0 Errors

- Very Good: 3 Errors

- Good: 6 Errors

- Bad: 9 Errors

- Poor: 12 Errors

If a translator makes 12 errors in 1000 words, the translation is not rewarded at all. If a translator consistently makes 12 errors in subsequent contributions, they are not qualified to continue in the translation team.
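To show how this grading might scale with the volume of a submission, here is a minimal sketch. Two assumptions are not in the proposal: the error count is scaled linearly to a 1000-word basis, and each example figure is treated as the upper limit of its grade, so anything above 9 errors per 1000 words falls into Poor. The `grade_translation` name is hypothetical.

```python
# Upper limits per 1000 words, taken from the example above.
# Treating each figure as an inclusive upper bound is an assumption.
GRADE_BANDS = [
    (0, "Excellent"),
    (3, "Very Good"),
    (6, "Good"),
    (9, "Bad"),
]


def grade_translation(error_points, word_count):
    """Map an error count to a grade, normalized to 1000 words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    # Assumed linear scaling: 2 errors in 1500 words ~ 1.33 per 1000 words.
    errors_per_1000 = error_points * 1000 / word_count
    for max_errors, grade in GRADE_BANDS:
        if errors_per_1000 <= max_errors:
            return grade
    return "Poor"  # not rewarded


print(grade_translation(3, 1000))   # Very Good
print(grade_translation(12, 1000))  # Poor
```

A real rule might instead use fixed thresholds per volume bracket; the linear scaling is just the simplest reading of "depends on the volume of words translated".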

2. Quantity of Translation Text - Number of Words

Here are the measurable metrics for translation volume:

- Submission volume should be at least 1000 words to be eligible for rewards

- Partial translations of fewer than 1000 words should be cohesive and consistent with existing translations of other parts of the project

- Duplicated strings and texts do not count toward the word count

- Translating static text that should not be translated, such as links, code and paths, will significantly lower the score of a contribution, reducing its chances of receiving a reward.

Grading

- 1500+ words - Staff Pick - 5 points

- 1000-1499 words - High score - 3 points

- Below 1000 words - For smaller projects / end of projects - Low Score - 1 point

I am hesitant to go beyond 1500 words, in case the contribution does not get rewarded (because of voting power, or VP, limits) even when the translation is of high quality. Adding extra words also puts a heavy burden on the LM, who is not rewarded for the extra review work.
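The volume rules and grading above could be sketched like this. It is a hypothetical helper, not Crowdin's own word count: it assumes duplicated strings are exact repeats after trimming whitespace, and it counts words by splitting on whitespace, which does not suit languages such as Chinese or Japanese.

```python
def count_words(strings):
    """Count words across translated strings, skipping duplicated strings."""
    seen = set()
    total = 0
    for s in strings:
        key = s.strip()
        if key in seen:
            continue  # duplicated strings do not count toward the total
        seen.add(key)
        total += len(key.split())
    return total


def volume_points(word_count):
    """Points for translation volume, following the grading above."""
    if word_count >= 1500:
        return 5  # Staff Pick
    if word_count >= 1000:
        return 3  # High score
    return 1  # Below 1000 words: smaller projects / end of projects


words = count_words(["Save file", "Save file", "Open the menu"])
print(words, volume_points(words))  # 5 1
```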

3. Difficulty of Translation Text

When it comes to the difficulty of the translation text, we need to look into 2 areas:

Levels of Difficulty of Translated Text

Highly Difficult: Legal & Medical texts, which use the specialized terminology of legal and medical practice

Difficult: Literary, Science and History texts

Average: Technical manuals, School texts

Low: Short phrases and simple words

These levels are what we currently have in the questionnaire, and they are similar to what is used in the professional world. Only experts are qualified to translate legal and medical texts. Most of our projects are not legal or medical, so under our current scoring most submissions fall into either the third (Average) or fourth (Low) level.

As a result, final scores currently come out about the same for most submissions, so we need to make some changes in this area.

Levels of Difficulty of Projects

Discussions among the moderators showed that we need a change here so that all moderators reach the same consensus on the difficulty of each project.

Since projects are already whitelisted, the DaVinci team and staff can pre-assess the difficulty of each project in Crowdin.

They can rate difficulty on the same 4 levels, with the following points:

- Highly difficult - 5 points

- Difficult - 3 points

- Average - 2 points

- Low - 1 point

Right now, a project's difficulty depends on the judgment of individual moderators. To keep the evaluation fair across the board, the DaVinci team can draw up a table so that all moderators use the same difficulty score for each project.
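A shared difficulty table could be as simple as a lookup from project to level. In this sketch the project names are hypothetical placeholders; the real table would list the whitelisted Crowdin projects as rated by the DaVinci team.

```python
# Points per difficulty level, as proposed above.
DIFFICULTY_POINTS = {
    "Highly difficult": 5,
    "Difficult": 3,
    "Average": 2,
    "Low": 1,
}

# Hypothetical project names, rated once by the DaVinci team and staff.
PROJECT_DIFFICULTY = {
    "example-medical-app": "Highly difficult",
    "example-science-wiki": "Difficult",
    "example-user-manual": "Average",
    "example-game-menu": "Low",
}


def difficulty_points(project):
    """Return the agreed difficulty points for a whitelisted project."""
    try:
        level = PROJECT_DIFFICULTY[project]
    except KeyError:
        raise KeyError(f"{project!r} has not been rated yet") from None
    return DIFFICULTY_POINTS[level]


print(difficulty_points("example-user-manual"))  # 2
```

Because every moderator reads from the same table, two moderators reviewing the same project can no longer assign it different difficulty scores.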

4. Translator's Knowledge & Skills

The translator's skills show in the write-up of the submission post, which reveals whether the translator is knowledgeable about his/her work.

- Does the translator make an effort to give an overview and explain the work of his translation in the submission post?

- Does the translator explain the tools he uses to translate difficult words, technical terms, etc.?

- Does the translator explain the importance of the project that he is translating?

- Does the translator highlight examples of strings that need special attention, e.g. difficult terms, terminology, language variants, slang, idioms, country standards, etc.?

- Overall procedure & examples given: 5 points

- Little effort is made in the process: 2 points

- No effort is made: 0 points

5. Submission Post at Utopian

To be eligible for a reward at Utopian, the submission post itself will also be scored. This covers the overall presentation of the post: its formatting, language and the translator's style. Points are given according to how engaging the post is.

- Is the post inviting for people to read?

- Is extra effort put into the post, such as videos, images, graphs, GIFs, etc.?

- Style of the overall language in presentation - is it interesting? boring? exciting?

- Highly engaging (videos, gifs, interesting) - 5 points

- Little engagement - 2 points

- No engagement & boring - 0 points

6. Teamwork with Language Moderator

Translators need to work as a team and must follow the instructions of their team's LM. The LM coordinates the translators and assigns them projects to translate from the whitelist provided by the DaVinci team. Once assigned to a project, a translator cannot change projects without the LM's permission. Translators should not submit a new contribution until their previous one has been reviewed by the LM.

- Does the translator respond to the LM within one day?

- Does the translator meet the deadline?

- Does the translator correct the mistakes?

- Is the translator communicative?

- Is the translator cooperative?

- Highly cooperative - 5 points

- Communicative but not cooperative - 3 points

- No response - 0 points

7. Added value to the Open Source Economy

Since translation work is part of the open source economy, it makes sense to add this question to the questionnaire.

Does the submission post include any added value content to the Open Source Economy?

Added value can be seen in giving:

- Tips

- Guides

- Conclusions

- Recommendations for translation

- Contacting project owner

- Add great value: 5 points

- Add some value: 3 points

- Add little value: 1 point

- Add no value: 0 points
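To see how the seven areas might combine into one score, here is a rough sketch. The proposal only fixes two things: quality (point 1) should carry about 75% of the total, and a Poor translation is not rewarded. Everything else is an assumption made for illustration: the grade-to-fraction mapping, a 0-100 scale, and splitting the remaining 25% evenly across points 2-7, each capped at 5 points. `total_score` is a hypothetical name.

```python
# Assumed fraction of the quality share earned by each grade.
QUALITY_FRACTION = {
    "Excellent": 1.0,
    "Very Good": 0.75,
    "Good": 0.5,
    "Bad": 0.25,
    "Poor": 0.0,
}
MAX_POINTS = 5  # points 2-7 each top out at 5 in the proposal


def total_score(quality_grade, other_points):
    """Combine point 1 (75%) with points 2-7 (25%) into a 0-100 score.

    other_points: six scores for points 2-7, each between 0 and 5.
    """
    if len(other_points) != 6:
        raise ValueError("expected scores for points 2-7")
    if any(not 0 <= p <= MAX_POINTS for p in other_points):
        raise ValueError("each score must be between 0 and 5")
    if quality_grade == "Poor":
        return 0.0  # a Poor translation is not rewarded at all
    quality_part = 75 * QUALITY_FRACTION[quality_grade]
    other_part = 25 * sum(other_points) / (MAX_POINTS * len(other_points))
    return round(quality_part + other_part, 2)


print(total_score("Excellent", [5, 5, 5, 5, 5, 5]))  # 100.0
print(total_score("Good", [3, 2, 1, 5, 3, 0]))       # 49.17
```

An even split keeps the sketch simple; the DaVinci team could just as well weight difficulty or volume more heavily than post presentation.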

Conclusion

We have had a lot of discussions and suggestions in the DaVinci Discord channel.

I started writing all of this in the Discord channel, but it became too long. I had already posted part of it in the channel yesterday, but to keep all the thoughts and proposals in one place, I decided to write this post today to make it easier for everyone to read.

This post proposes how we can move forward from the new guidelines to an improved questionnaire.

All the proposed metrics are designed to be as objective as possible in evaluating translation quality.

We welcome all suggestions and feedback. Please comment below so that we can have everything in one place as the DaVinci team writes out the final questionnaire.

Blog Series on Measuring Quality Metrics for Translation Category

- Translation Category Part 1 - What Metrics & Standards Professionals Use To Evaluate Translation Quality

- Translation Category Part 2 - Four Standards Professionals Use To Evaluate Translation Quality

- Translation Category Part 3 - What Metrics Do Professionals Use To Evaluate Translation?

- Translation Questionnaire Part 4 - Quality Metrics: From Principles to Practice

Thank you for your attention,

Rosa

@rosatravels

Thank you for reading this post! If you like the post, please resteem and comment.

Thank you for reading! I hope you enjoyed it. If you like what I share, please upvote and follow me.
