If this is your first time seeing this series, I recommend starting at the beginning.

NAS 2019 Build Series

- Building A New Nas

- NAS Build 2019 Step 1 - Ordering Parts

- NAS Build 2019 Step 2 - First parts delivery

- NAS Build 2019 Step 3 - Fan Upgrades

- NAS Build 2019 Step 4 - Power Supply Installation

- NAS Build 2019 Step 5 - Final Parts Delivery

- NAS Build 2019 Step 6 - CPU Installation

- NAS Build 2019 Step 7 - Hard Disks!

- NAS Build 2019 Step 8 - Firmware Updates

- NAS Build 2019 Step 9 - OS Install & Initial Testing

- NAS Build 2019 Step 10 - Introduction to ZFS

- NAS Build 2019 Step 11 - Some thoughts about the final build

- NAS Build 2019 Step 12 - Conclusion

This will be the final post in the series. I have been testing different final configurations on the NAS and using it for around a month. Everything has been working fantastically.

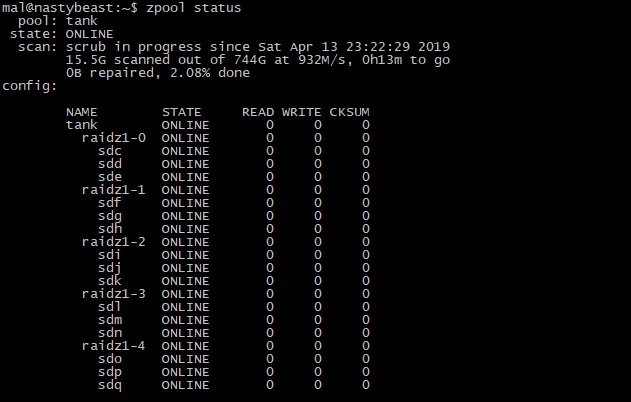

Final Raid Configuration

I stuck with ZFS, which is no surprise as it was my primary goal. I really love ZFS and the benefits it offers. I recommend reading my Introduction to ZFS if you want to understand the benefits of ZFS.

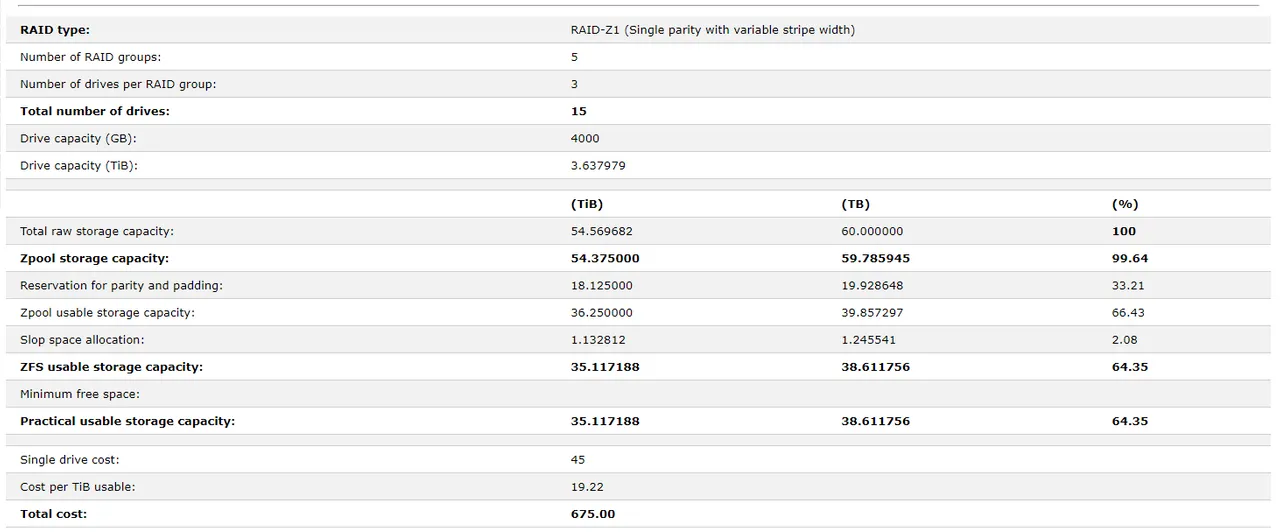

I finally decided on five RAIDZ vdevs (3 disks each). This is most similar to RAID 50 with 5 stripes of 3 disks. This gives me a total usable storage size of 35TB after the parity and ZFS allocation costs.
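To make the layout concrete, here is a minimal Python sketch that builds a `zpool create` command line with five three-disk `raidz` vdevs. The pool name `tank` and the device names `disk01` through `disk15` are placeholders made up for illustration, not the names used on this NAS, and the script only prints the command rather than running it.

```python
def build_zpool_create(pool, disks, vdev_width, vdev_type="raidz"):
    """Return a `zpool create` argument list that groups disks into equal-width vdevs."""
    if len(disks) % vdev_width != 0:
        raise ValueError("disk count must be a multiple of the vdev width")
    cmd = ["zpool", "create", pool]
    for start in range(0, len(disks), vdev_width):
        # Each vdev is introduced by its type keyword, followed by its member disks.
        cmd.append(vdev_type)
        cmd.extend(disks[start:start + vdev_width])
    return cmd


# Hypothetical device names; a real system would use stable /dev/disk/by-id paths.
disks = [f"disk{n:02d}" for n in range(1, 16)]
cmd = build_zpool_create("tank", disks, vdev_width=3)
print(" ".join(cmd))
```

ZFS stripes writes across all top-level vdevs in a pool, which is why repeating the `raidz` keyword five times produces the RAID 50 style layout described above.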

There is a really cool ZFS calculator that does a good job of visualizing the configuration. I didn't find it until after I built the NAS and sat down to write this final post.

(open the image in a new tab to see clearly)

Speed was my primary concern, but I didn't want to sacrifice redundancy or have long rebuild times.

I put a lot of thought into the final configuration. My original plan was 3 striped 5-disk parity sets, and I did some testing with 7 striped mirrors as well. The final configuration is a balance of the two: I get a little more speed and redundancy than the striped parity sets and a little more space than the mirrors.
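The trade-off between the three layouts can be sketched with some quick arithmetic. The Python snippet below is only an illustration: it assumes single-parity RAIDZ (which is what `raidz` means in ZFS), counts capacity in whole disks rather than terabytes, and ignores ZFS allocation overhead. The `Layout` class and its field names are my own invention for this example.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    vdevs: int
    disks_per_vdev: int
    redundant_per_vdev: int  # disks' worth of parity or mirror copies in each vdev

    @property
    def total_disks(self):
        return self.vdevs * self.disks_per_vdev

    @property
    def data_disks(self):
        # Capacity left for data, measured in whole disks.
        return self.vdevs * (self.disks_per_vdev - self.redundant_per_vdev)

    @property
    def efficiency(self):
        return self.data_disks / self.total_disks


layouts = [
    Layout("5 x 3-disk raidz (final choice)", 5, 3, 1),
    Layout("3 x 5-disk raidz (original plan)", 3, 5, 1),
    Layout("7 x 2-disk mirrors (tested)", 7, 2, 1),
]

for layout in layouts:
    print(
        f"{layout.name}: {layout.total_disks} disks, "
        f"{layout.data_disks} disks of data, "
        f"{layout.efficiency:.0%} space efficiency, "
        f"{layout.vdevs} vdevs to stripe across"
    )
```

Each vdev in all three layouts survives one disk failure, so more vdevs means both more stripes and more failures tolerated in the best case. The final layout sits in the middle: 10 disks of data across 5 vdevs, versus 12 across 3 for the wide parity sets and 7 across 7 for the mirrors.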

I will be running virtual machines on this box. That is generally a big no-no on parity RAID due to very poor performance, so I will be running the virtual machines off the RAID 1 boot SSDs instead.

Parity RAID generally suffers from really poor write performance, but with the number of disks I have (15) spread across five vdevs (stripes), performance is fantastic over a 10 Gbps connection. ZFS performs well with a large number of small vdevs.

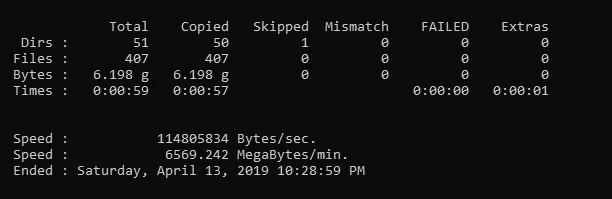

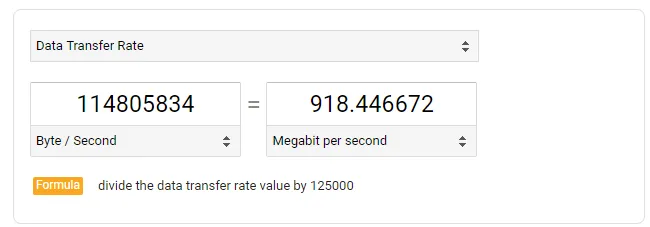

Performance

This was a robocopy synchronization to move data from my machine to the NAS, meaning it was a write workload. Writes perform a lot worse than reads on a parity setup, and it can still nearly saturate a 10 Gbps network interface. Random I/O will perform worse with parity than with striped mirrors, but with five stripes it is still really good. If it does become a problem, I have considered installing a PCIe adapter for NVMe drives and creating a ZIL SLOG device (write cache).
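For a sense of scale, here is a small Python sketch of what saturating a 10 Gbps link means in file-copy terms. It computes only the theoretical line rate using decimal units and ignores Ethernet, TCP, and SMB overhead, so real transfers will land somewhat below these numbers. The function names and the 1 TB example size are hypothetical.

```python
def line_rate_mb_per_s(gbps):
    """Convert a link speed in gigabits per second to megabytes per second (decimal)."""
    return gbps * 1000 / 8


def transfer_seconds(size_tb, gbps):
    """Best-case time to move size_tb terabytes over a link running at full line rate."""
    size_mb = size_tb * 1_000_000
    return size_mb / line_rate_mb_per_s(gbps)


rate = line_rate_mb_per_s(10)
seconds = transfer_seconds(1, 10)
print(f"10 Gbps is at most {rate:.0f} MB/s")
print(f"1 TB takes at least {seconds / 60:.1f} minutes at that rate")
```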

I do have two SSDs in the box and I have tested using them as a write cache, but doing so slowed down sequential writes a lot and only helped with random I/O. I would need to use NVMe drives to avoid the performance loss on sequential writes.

Unfortunately, the motherboard doesn't support NVMe drives, but there is a solution.

The total cost to add two 500GB NVMe drives and a PCIe NVMe adapter is around $300. I don't think I need it right now, but it is an option down the road. If I start to create a lot of VMs, this would also be a large benefit. Ideally, I wouldn't put the write cache and the VMs on the same disks, as latency is critical for a write cache, but the minimum cost for a top-of-the-line NVMe drive is pretty high. The above adapter supports four NVMe drives, but that would be overkill.

Software

I am running vanilla Ubuntu 18.04 Server with the ZFS on Linux module, which is available through the system package manager.

I am using Samba to share data with Microsoft Windows machines and QEMU/KVM for virtual machines. I also have Docker installed for running containers.

I have ZED (ZFS Event Daemon) installed to assist in monitoring the ZFS file systems.

I am using Net Data for monitoring the server and getting an overview of what is going on. I run Net Data on all my witness nodes and full node. I wrote a post about Net Data a while ago and I know some witnesses have started to use it as a result.

I have a dedicated UPS, but I still need to configure its agent so that it safely shuts down the operating system in the event of power loss. ZFS is fantastic at handling abrupt power loss and will usually prevent the data loss that parity RAID can suffer during power failures, where the parity and data become out of sync.

Let me know below if you liked this series. I put a lot of time into it and wanted to document my progress building the ultimate NAS for around $1,700 USD.

I want to thank the JDM_WAAAT community for their ideas and hardware recommendations. I highly recommend checking them out at https://www.serverbuilds.net/anniversary if you want to build something similar.